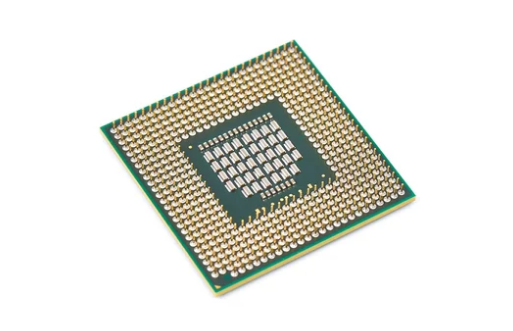

The Central Processing Unit (CPU) has undergone one of the most remarkable transformations in the history of technology. What began as a modest chip capable of handling basic instructions has evolved into a multi-core powerhouse that fuels everything from smartphones to AI supercomputers.

This article walks you through the evolution of the CPU—from early single-core processors to today’s multi-core monsters—and explores how this transformation has reshaped computing as we know it.

The Birth of the CPU: Simple and Single-Core

The first commercially available microprocessor, the Intel 4004, was released in 1971. It was groundbreaking for its time, with a 4-bit architecture and a peak rate of roughly 92,000 instructions per second. It was also a single-core processor, meaning it could execute only one stream of instructions at a time.

Key features of early CPUs:

- One core

- Limited clock speeds (measured in kilohertz or low megahertz)

- Tiny instruction sets

- Used in calculators and early computers

These early CPUs laid the foundation for everything we have today but were severely limited in performance and functionality by today’s standards.

The Rise of the Megahertz Era

Through the 1980s and 1990s, CPUs became far more sophisticated. Processors such as the Intel 8086 (introduced in 1978), the Pentium, and the AMD K5 pushed clock speeds from a few megahertz to hundreds of megahertz, and designs of this era added features like pipelines, on-chip caches, and integrated floating-point units.

These developments allowed for:

- Faster data processing

- More efficient instruction handling

- Better multitasking (still limited by single-core architecture)

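However, even with these improvements, mainstream CPUs still had a single core. The operating system created the impression of multitasking by switching that core rapidly between programs, but only one program was actually running at any given instant.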

The Gigahertz Race: Hitting the Speed Limit

In the late 1990s and early 2000s, CPU manufacturers engaged in what’s often referred to as the “gigahertz race.” Intel and AMD competed to release CPUs with faster clock speeds—hitting the 1 GHz milestone around the year 2000.

But with increasing speed came problems:

- Excessive heat

- Higher power consumption

- Diminishing returns in real-world performance

Manufacturers realized that simply increasing clock speed was no longer sustainable. It was time to rethink how CPUs handled workloads.
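A common first-order model helps explain why the clock-speed approach ran into a wall. It is a textbook approximation for CMOS logic, not a figure taken from any specific processor, and the symbols are introduced here purely for illustration:

$$
P_{\text{dynamic}} \approx \alpha \, C \, V^{2} \, f
$$

Here $\alpha$ is the fraction of the chip switching each cycle, $C$ is the switched capacitance, $V$ is the supply voltage, and $f$ is the clock frequency. Because reaching a higher $f$ usually also requires a higher $V$, power (and therefore heat) tends to grow faster than the clock speed itself.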

The Multi-Core Revolution Begins

The solution? Multiple cores.

Instead of making one core faster, CPU designers started putting multiple cores on a single chip. This allowed the processor to run several tasks truly at once, improving performance for workloads that can be split up, without needing to raise clock speeds.

Key Milestones:

- 2005: Intel Pentium D – First dual-core processor from Intel.

- 2005: AMD Athlon 64 X2 – AMD's dual-core desktop competitor, launched the same year.

- 2007–2010: Quad-core CPUs become common in consumer desktops.

With multi-core CPUs, you could browse the web, run antivirus software, and listen to music all at the same time without lag. Software also started evolving to take advantage of multiple cores, especially in gaming, video editing, and 3D rendering.
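To make the idea of software using several cores concrete, here is a minimal Python sketch. It is an illustration only: the prime-counting workload and the helper names (`is_prime`, `count_primes`, `split_range`, `count_primes_parallel`) are invented for this example, and the cap of 8 workers is an arbitrary choice. It splits a CPU-heavy job into chunks and hands each chunk to a separate worker process, which the operating system can schedule on a separate core.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division; deliberately CPU-heavy so the work is worth splitting."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def count_primes(bounds: tuple[int, int]) -> int:
    """Count primes in the half-open range [start, stop)."""
    start, stop = bounds
    return sum(1 for n in range(start, stop) if is_prime(n))


def split_range(limit: int, parts: int) -> list[tuple[int, int]]:
    """Split [0, limit) into `parts` contiguous chunks of near-equal size."""
    step, extra = divmod(limit, parts)
    chunks, start = [], 0
    for i in range(parts):
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def count_primes_parallel(limit: int, workers: int) -> int:
    """Give each worker process one chunk and add up the partial counts."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, split_range(limit, workers)))


if __name__ == "__main__":
    # cpu_count() reports logical cores and may return None, hence the fallback.
    workers = min(os.cpu_count() or 1, 8)
    total = count_primes_parallel(50_000, workers)
    print(f"{workers} worker processes found {total} primes below 50,000")
```

Separate processes are used here rather than threads because, in standard CPython builds, threads running pure-Python CPU-bound code do not execute in parallel. How much faster the parallel version runs depends on the machine and on how evenly the chunks divide the work.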

Modern CPUs: Multi-Core Monsters

Today’s CPUs are no longer just dual- or quad-core. We now have:

- 6-core, 8-core, 12-core, and even 16-core processors for desktops and laptops

- Threadripper and Xeon processors with 32, 64, or more cores for workstations and servers

- Smartphone CPUs with big.LITTLE architecture that combine performance and efficiency cores

What makes modern multi-core CPUs so powerful?

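- Parallel processing: Each core runs its own thread, so several threads execute at the same time.

- Simultaneous multithreading (SMT), which Intel markets as Hyper-Threading: Each core can run two hardware threads at once. This keeps the core busier, but it does not double its performance.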

- Integrated GPUs and AI engines: CPUs now include more specialized components for media, graphics, and machine learning.

- Power management: Intelligent systems that balance performance with battery life and heat output.

The result? Fast, efficient, and smart processors that adapt to your needs in real time.

CPUs in the Age of AI and Cloud Computing

With the rise of cloud computing, machine learning, and big data, the demands on CPUs have changed.

In response, modern CPUs now offer:

- Dedicated AI acceleration: For faster neural network inference.

- Support for cloud scalability: CPUs in servers are optimized for virtualization and cloud-native workloads.

- Chiplet architecture: AMD’s Ryzen and EPYC processors separate cores and other parts into individual “chiplets” for better scalability and cost efficiency.

Moreover, heterogeneous computing is becoming the norm. Instead of relying solely on CPUs, modern systems combine CPUs, GPUs, and other processors (like NPUs and TPUs) to deliver optimal performance.

The Future of CPUs: What’s Next?

The evolution is far from over. Here’s what’s on the horizon:

- 3nm and smaller fabrication: Packing more transistors into less space generally improves both performance and energy efficiency.

- Quantum processors: Still in early development, but could revolutionize computing entirely.

- ARM-based CPUs: Apple’s M-series chips have shown that ARM can rival x86 in both performance and power efficiency.

- AI-optimized processors: CPUs are being redesigned with built-in support for AI workloads and predictive processing.

The future CPU won’t just be faster—it will be smarter, more efficient, and more specialized than ever before.

Why Multi-Core Matters to You

You may be wondering: Do I really need all these cores? The answer depends on what you do.

- Everyday users: Quad-core or 6-core CPUs are usually sufficient.

- Gamers: Modern games benefit from 6 to 12 cores.

- Creators: Video editors, 3D designers, and musicians should look for 8+ cores with high clock speeds.

- Developers and engineers: 16+ core processors help with compiling, rendering, and virtualization.

- Enterprise and AI users: High-core-count CPUs are essential for data-heavy tasks and AI training.

Knowing how CPU cores have evolved helps you make smarter buying decisions and understand the true potential of your devices.
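One way to reason about how many cores a workload can actually use is Amdahl's law, a standard idealized model that this article does not otherwise cover. The sketch below is a rough illustration under that model: the function name `amdahl_speedup` and the parallel fractions are hypothetical, and the numbers are not measurements of any real software.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when `parallel_fraction` of the work scales across `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time; only the parallel part is divided.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # The fractions below are made-up examples, not measured values.
    for label, fraction in [("mostly serial", 0.50), ("highly parallel", 0.95)]:
        for cores in (4, 16, 64):
            speedup = amdahl_speedup(fraction, cores)
            print(f"{label:>15}, {cores:>2} cores: {speedup:.2f}x")
```

Under this model, a task that is only half parallel gains less than 2x even on 64 cores, while a task that is 95% parallel gains roughly 15x. That is why everyday workloads rarely benefit from very high core counts, while rendering and compiling do.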

Final Thoughts: From Simplicity to Superpower

The evolution of the CPU is a journey from simple, single-task chips to complex, multi-core engines capable of handling the world’s most demanding workloads.

In just a few decades, CPUs have gone from running calculators to powering artificial intelligence, from 1 core to 64+ cores, and from kilohertz clock speeds to multi-threaded, multi-gigahertz designs.

Whether you’re a casual user or a tech enthusiast, the CPU inside your device is a marvel of engineering—a direct result of decades of innovation, competition, and imagination.
